
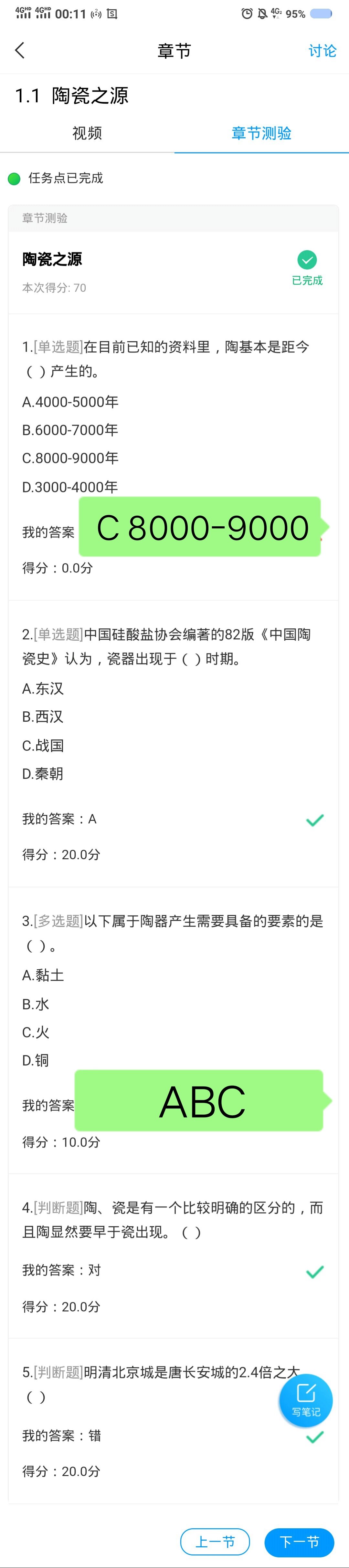

Reposted from: 爱可可-爱生活

Introduction

Neural networks are a different breed of models compared to traditional supervised machine learning algorithms. Why do I say so? There are multiple reasons, but the most prominent is the cost of running the algorithms on hardware.

In today’s world, RAM on a machine is cheap and plentiful. If you need hundreds of GBs of RAM to run a super complex supervised machine learning problem, it can be yours for a little investment or rent. On the other hand, access to GPUs is not that cheap. If you need access to a hundred GB of VRAM on GPUs, it won’t be straightforward and will involve significant costs.

Now, that may change in the future. But for now, it means that we have to be smarter about the way we use our resources in solving deep learning problems, especially when we try to solve complex real-life problems in areas like image and voice recognition. Once you have a few hidden layers in your model, adding another hidden layer can require immense resources.

Thankfully, there is something called “Transfer Learning”, which enables us to use pre-trained models from other people by making small changes. In this article, I am going to explain how we can use pre-trained models to accelerate our solutions.

Note: This article assumes basic familiarity with neural networks and deep learning. If you are new to deep learning, I would strongly recommend that you read the following articles first:

What is deep learning and why is it getting so much attention?

Deep Learning vs. Machine Learning – the essential differences you need to know!

25 Must Know Terms & concepts for Beginners in Deep Learning

Why are GPUs necessary for training Deep Learning models?

Table of Contents

What is transfer learning?

What is a Pre-trained Model?

Why would we use pre-trained models? – A real life example

How can I use pre-trained models?

Extract Features

Fine tune the model

Ways to fine tune your model

Use the pre-trained model for identifying digits

Retraining the output dense layers only

Freeze the weights of first few layers

What is transfer learning?

Let us start by developing an intuition for transfer learning through a simple teacher-student analogy.

A teacher has years of experience in the particular topic he/she teaches. With all this accumulated information, the lectures that students get are a concise and brief overview of the topic. So it can be seen as a “transfer” of information from the learned to the novice.

Keeping this analogy in mind, let us compare it to a neural network. A neural network is trained on data. The network gains knowledge from this data, which is stored as the “weights” of the network. These weights can be extracted and then transferred to another neural network. Instead of training the other neural network from scratch, we “transfer” the learned features.
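To make the idea of extracting weights and transferring them a little more concrete, here is a minimal sketch in plain NumPy. It is not the code from any particular library: the dictionary-based network, the helper names `init_network` and `forward`, and the layer sizes are all assumptions made up for illustration, and the "source" network is only pretended to be trained.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_network(n_in, n_hidden, n_out):
    """Hypothetical two-layer network stored as a dict of weight arrays."""
    return {
        "hidden": rng.normal(size=(n_in, n_hidden)),
        "output": rng.normal(size=(n_hidden, n_out)),
    }

def forward(net, x):
    """Hidden layer with tanh activation, followed by a linear output layer."""
    h = np.tanh(x @ net["hidden"])
    return h @ net["output"]

# Assumption: pretend this network was already trained on a large source dataset.
source_net = init_network(n_in=4, n_hidden=8, n_out=3)

# A new network for a different task: same input and hidden sizes, 2 outputs.
target_net = init_network(n_in=4, n_hidden=8, n_out=2)

# The "transfer": copy the hidden-layer weights from the source network.
# The output layer keeps its fresh random weights and would be trained
# on the new task.
target_net["hidden"] = source_net["hidden"].copy()

# Both networks now compute the same hidden features for the same input.
x = rng.normal(size=(5, 4))
source_features = np.tanh(x @ source_net["hidden"])
target_features = np.tanh(x @ target_net["hidden"])
predictions = forward(target_net, x)
```

Note that the copy only works here because both networks use the same input and hidden layer sizes. In practice the layers being transferred have to be compatible in shape with the network receiving them.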

Now, let us reflect on the importance of transfer learning by relating it to our evolution. And what better way than to use transfer learning for this! So I am picking up on a concept touched on by Tim Urban in one of his recent articles on waitbutwhy.com.

Tim explains that before language was invented, every generation of humans had to re-invent knowledge for themselves, and this is how knowledge growth happened from one generation to the next:

Then, we invented language! A way to transfer learning from one generation to another, and this is what happened over the same time frame:

Isn’t it phenomenal and super empowering? So, transfer learning by passing on weights is the equivalent of the language used to disseminate knowledge over generations in human evolution.

Link: https://www.analyticsvidhya.com/blog/2017/06/transfer-learning-the-art-of-fine-tuning-a-pre-trained-model/

Original post:

https://weibo.com/1402400261/F5Y88scY0?from=page_1005051402400261_profile&wvr=6&mod=weibotime&type=comment#_rnd1496399838410
